AI is behind more of the content we read than most people realize. Many essays, blog posts, and emails are written or polished by tools like ChatGPT. And while that might save time, it also raises questions.

Not everyone expects AI to be involved. In schools, workplaces, and publishing, people still care about who wrote what. Was it a student’s own work? A candidate’s real voice? A writer’s authentic take?

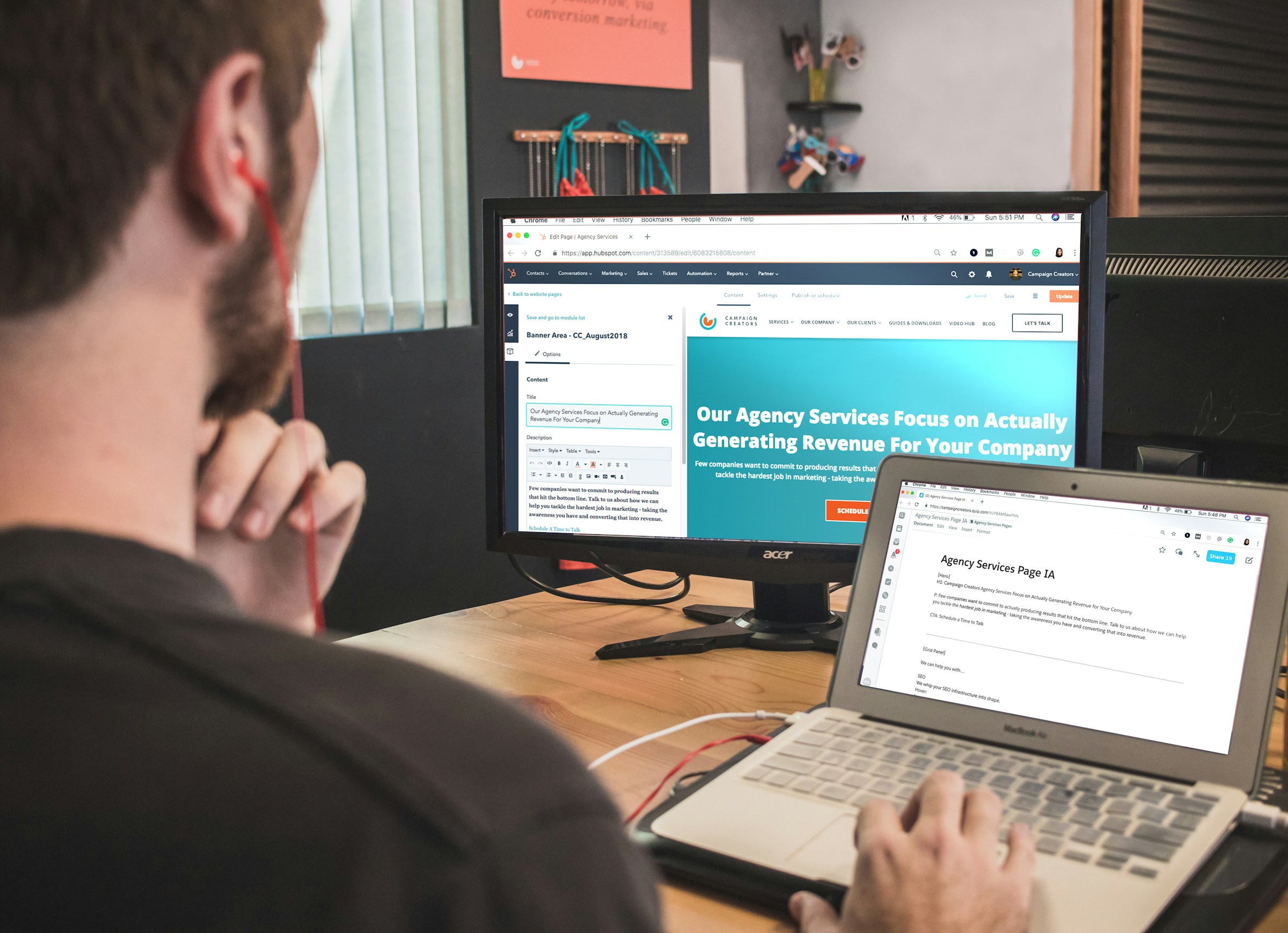

That’s where AI essay detectors step in. Their job is to figure out whether a piece of writing came from a human mind or a machine. Sounds straightforward, but it’s not. Spotting the difference can be like telling a hand-painted portrait from a digital copy — it takes a sharp eye and the right tools. For teachers, editors, and employers, these tools offer a way to make better, more informed decisions.

How AI detectors actually work

Detectors don’t “know” who wrote a text in the way people do. Instead, they look for patterns, the kind that make a text feel a little too perfect. Tools like Smodin’s AI checker are designed to spot those signals quickly, giving you a snapshot of whether something might be AI-written.

Think of it like this: a teacher who’s read dozens of papers from the same student can usually tell when something’s off. The tone shifts, the vocabulary jumps a level, or the flow suddenly becomes smooth in a way it never was before. AI detectors work in a similar way, only they use data and algorithms instead of gut instinct.

They scan for signs like:

- Sentences that follow predictable patterns;

- A tone that stays unnaturally even throughout;

- Repetition of certain words or phrases;

- A lack of personality or detail that feels “human.”

Some detectors even use AI models of their own to compare the text against what’s typical for machine-written content.
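
To make the signals above a little more concrete, here is a minimal Python sketch that measures three of them: how much sentence length varies, how varied the vocabulary is, and how often the single most common word repeats. This is an illustration only, not how Smodin or any other real detector works. The function name `style_signals` and the three measurements are assumptions made up for this example, and numbers like these cannot tell you on their own who wrote a text.

```python
import re
from collections import Counter
from statistics import mean, pstdev


def style_signals(text: str) -> dict:
    """Compute a few rough style measurements (hypothetical, for illustration)."""
    # Crude sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = re.findall(r"[a-z']+", text.lower())
    if not sentences or not words:
        raise ValueError("text must contain at least one word")

    # Number of words in each sentence.
    lengths = [len(re.findall(r"[A-Za-z']+", s)) for s in sentences]
    counts = Counter(words)

    return {
        # Near 0 means every sentence is about the same length (predictable).
        "sentence_length_spread": pstdev(lengths) / mean(lengths),
        # Share of distinct words; lower means more repetition.
        "vocabulary_variety": len(counts) / len(words),
        # How much of the text is the single most frequent word.
        "top_word_share": counts.most_common(1)[0][1] / len(words),
    }


even = "The cat sat down. The dog sat down. The bird sat down."
uneven = "No. I walked all the way home in the rain because the bus never came. Typical."

print(style_signals(even))
print(style_signals(uneven))
```

The first sample has identical sentence lengths, so its spread is zero, while the second swings between one-word and long sentences. Detectors that rely on models of their own go well beyond simple counts like these.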

Are these detectors actually accurate?

They’re good, but not flawless. Sometimes they misfire. A completely human-written essay might get flagged (a false positive), and a clever AI-generated one might pass as human-written (a false negative). It’s a bit like using a spam filter: most of the time it gets things right, but now and then, something unexpected slips through.

Why does that happen? Because AI is learning fast. It’s getting better at sounding human — adding casual phrases, changing rhythm, even throwing in small errors on purpose. And as AI improves, detectors are constantly adjusting to keep up.

Still, that doesn’t mean they’re useless. Far from it. They’re best used as an early warning system. If a detector raises a red flag, take a closer look — don’t jump to conclusions. It’s a starting point, not the final word.
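
A bit of arithmetic shows why a flag is a starting point rather than proof. The sketch below applies Bayes' rule to estimate how often a flagged text really is AI-written. The function name `chance_flag_is_right` and every number passed to it are hypothetical, chosen only to illustrate the reasoning; they are not measurements of any real detector.

```python
def chance_flag_is_right(ai_share: float, catch_rate: float, false_alarm_rate: float) -> float:
    """Probability that a flagged text is actually AI-written (Bayes' rule).

    ai_share:         fraction of all submitted texts that are AI-written
    catch_rate:       fraction of AI-written texts the detector flags
    false_alarm_rate: fraction of human-written texts the detector flags
    """
    for name, value in (
        ("ai_share", ai_share),
        ("catch_rate", catch_rate),
        ("false_alarm_rate", false_alarm_rate),
    ):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")

    flagged_ai = ai_share * catch_rate
    flagged_human = (1.0 - ai_share) * false_alarm_rate
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        raise ValueError("no texts would be flagged with these rates")
    return flagged_ai / total_flagged


# Made-up scenario: 10% of essays are AI-written, the detector catches 90%
# of those, and it wrongly flags 5% of human-written essays.
print(round(chance_flag_is_right(0.10, 0.90, 0.05), 2))
```

With those invented numbers, roughly two out of three flags point at AI-written text and one in three lands on a human writer, which is why a closer look should come before any conclusion.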

Real-life situations where detection matters

This isn’t just a theoretical concept. AI detectors are already part of daily workflows — used when something in a piece of writing just doesn’t sit right, even if you can’t quite explain why.

Imagine a professor, deep into grading stacks of student essays. Most are familiar — solid, flawed, human. But one jumps out: clean, structured, strangely neutral. It doesn’t feel like the student’s voice. Instead of guessing, the professor pastes a few lines into a detector. The result? High AI likelihood. That doesn’t mean automatic failure, but it starts a conversation — maybe the student used AI to brainstorm, maybe they leaned on it too much. Either way, it’s a chance to talk about learning, effort, and where tech fits in.

Or think about a content manager reviewing drafts from a writing team. Each writer has their style. But one article? Feels flat. Too formal. Too… machine-like. A quick scan confirms the suspicion: high AI probability. That’s not an instant “no,” but it might call for a revision — or just a nudge to the writer to add their own voice back into the mix.

Same goes for hiring. A recruiter reads a cover letter that checks every box but feels oddly generic. A detector can flag that it might not be entirely the candidate’s own writing. That’s useful information when deciding who to call in for an interview.

Here’s where AI detectors prove especially helpful:

- When you need a second opinion before making a decision;

- When a piece of writing feels off, but you can’t quite put your finger on why;

- When originality matters — like in academic work, journalism, or hiring;

- When content seems too polished or too neutral to feel personal;

- When quality control depends on tone, voice, and authenticity.

Across the board, these tools don’t replace human judgment — they sharpen it. They shine a light where something might need a second look. And in a world overflowing with digital content, that’s more than helpful — it’s necessary.

Can AI outsmart the detectors?

It’s starting to feel like a high-stakes game of tag. AI writes. Detectors catch. AI writes smarter. Detectors catch smarter. And so it goes.

Some newer AI models are already trained to “sound human.” They mix up sentence lengths, add emotional cues, even toss in small mistakes on purpose — all to slip under the radar. Some people even use prompts specifically designed to beat detection tools.

But here’s what AI still struggles with: messy, raw, human nuance. The kind of writing that comes from real experience, sudden inspiration, or emotional reaction. It’s not just about sentence variety — it’s about meaning, timing, instinct.

Can AI fool the system? Yes, sometimes. But not always. And certainly not forever.

Final thoughts: detectors are tools, not verdicts

AI detectors aren’t here to police creativity. They’re here to provide insight. Like autocorrect or grammar checkers, they help us be more aware of what we’re reading — and writing.

The real point isn’t to “catch” someone using AI. It’s to understand how, when, and why AI was used. Did it support the writer’s process, or replace it? Was the final result reviewed and refined by a human? That’s the kind of context that matters more than the yes/no result on a detection tool.

As AI becomes a more natural part of how we write, the real skill will be knowing when authenticity matters — and being able to recognize it when we see it.